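Introduction: I am currently focused entirely on building a cloud-native PaaS platform. To understand Kubernetes better and make debugging easier, I did a first pass at analyzing the kubelet source code. Plenty of articles already analyze kubelet, but without walking through the code yourself, what you learn feels like rote memorization. This post briefly traces kubelet's startup process and its syncLoop; the individual kubelet components can be analyzed separately later.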

Startup process

Like the other components, kubelet's entry function lives in cmd/kubelet/kubelet.go:

```go
func main() {
	// ...
}
```

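main calls NewKubeletCommand to create a cobra command object. That command's Run function does three main things: it builds the configuration kubelet needs from the command-line flags and the config file, initializes kubeletDeps (the components kubelet depends on), and then calls Run to create and start kubelet: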

```go
// NewKubeletCommand creates a *cobra.Command object with default parameters
// ...
```
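The three steps of that Run function can be sketched with the standard library's flag package in place of cobra. This is a minimal, hypothetical model rather than kubelet's code: KubeletConfig, Dependencies, buildConfig, newDependencies, runKubelet and commandRun are illustrative names, the config file is represented by an already-parsed struct, and --hostname-override and --max-pods serve only as example flag names. The sketch makes flags override file values by using the file values as the flag defaults.

```go
package main

import (
	"flag"
	"fmt"
	"io"
)

// KubeletConfig is a hypothetical, heavily reduced stand-in for the
// configuration kubelet builds from its flags and config file.
type KubeletConfig struct {
	NodeName string
	MaxPods  int
}

// Dependencies is a hypothetical stand-in for kubeletDeps: the components
// kubelet relies on, built before kubelet itself is created.
type Dependencies struct {
	Components []string
}

// buildConfig starts from the config-file values and lets any flag that is
// actually passed on the command line override them.
func buildConfig(fromFile KubeletConfig, args []string) (KubeletConfig, error) {
	cfg := fromFile
	fs := flag.NewFlagSet("kubelet-sketch", flag.ContinueOnError)
	fs.SetOutput(io.Discard) // the caller receives parse errors as values
	// Flag defaults are the file values, so unset flags keep what the file said.
	fs.StringVar(&cfg.NodeName, "hostname-override", cfg.NodeName, "name to register the node under")
	fs.IntVar(&cfg.MaxPods, "max-pods", cfg.MaxPods, "maximum number of pods on this node")
	if err := fs.Parse(args); err != nil {
		return KubeletConfig{}, err
	}
	return cfg, nil
}

// newDependencies stands in for initializing kubeletDeps.
func newDependencies() *Dependencies {
	return &Dependencies{Components: []string{"kubeClient", "eventClient", "containerManager"}}
}

// runKubelet stands in for Run; here it only reports what it would start.
func runKubelet(cfg KubeletConfig, deps *Dependencies) string {
	return fmt.Sprintf("starting kubelet on %s (max %d pods) with %d deps",
		cfg.NodeName, cfg.MaxPods, len(deps.Components))
}

// commandRun mirrors the three steps of the command's Run function.
func commandRun(fromFile KubeletConfig, args []string) (string, error) {
	// 1. Merge config-file values and command-line flags.
	cfg, err := buildConfig(fromFile, args)
	if err != nil {
		return "", err
	}
	// 2. Initialize the dependencies kubelet needs.
	deps := newDependencies()
	// 3. Create and start kubelet.
	return runKubelet(cfg, deps), nil
}

func main() {
	fromFile := KubeletConfig{NodeName: "node-a", MaxPods: 110}
	out, err := commandRun(fromFile, []string{"--max-pods=50"})
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println(out) // starting kubelet on node-a (max 50 pods) with 3 deps
}
```

Because the flag defaults come from the file, an unspecified flag such as --hostname-override leaves node-a in place while --max-pods=50 replaces the file's 110.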

Run does nothing but call run, so we can go straight to run, which mainly does the following:

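- Initializes the basic configuration, including kubeclient, eventclient, heartbeatclient, ContainerManager, and so on;
- Initializes the CRI through PreInitRuntimeService; this is where both the runtime service that creates containers and the streaming server behind kubectl exec are set up;
- Through RunKubelet, initializes all the components kubelet depends on, obtains the path of the Docker config file, and runs kubelet.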

```go
func run(s *options.KubeletServer, kubeDeps *kubelet.Dependencies, featureGate featuregate.FeatureGate, stopCh <-chan struct{}) (err error) {
	// ...
}
```

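Now let's focus on RunKubelet. It first instantiates a kubelet through createAndInitKubelet (which registers the various managers and modules), and then startKubelet calls Kubelet.Run to start kubelet along with every manager and module it registered: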

```go
func RunKubelet(kubeServer *options.KubeletServer, kubeDeps *kubelet.Dependencies, runOnce bool) error {
	// ...
}
```
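The split between creating kubelet and starting it can be modeled as a register-then-start pattern. This is a minimal sketch under stated assumptions: Module, namedModule, and the string labels such as statusManager are hypothetical stand-ins for kubelet's real managers, and syncLoop is stubbed to simply wait on a stop channel.

```go
package main

import "fmt"

// Module is a hypothetical interface for the managers/modules kubelet registers.
type Module interface {
	Name() string
	Start() error
}

// namedModule is a trivial Module that always starts successfully.
type namedModule string

func (m namedModule) Name() string { return string(m) }
func (m namedModule) Start() error { return nil }

// Kubelet holds the registered modules and records which ones were started.
type Kubelet struct {
	modules []Module
	started []string
}

// createAndInitKubelet builds the Kubelet and registers its modules; nothing runs yet.
func createAndInitKubelet(names ...string) *Kubelet {
	kl := &Kubelet{}
	for _, n := range names {
		kl.modules = append(kl.modules, namedModule(n))
	}
	return kl
}

// Run starts every registered module in order, then blocks in the (stubbed) sync loop.
func (kl *Kubelet) Run(stop <-chan struct{}) error {
	for _, m := range kl.modules {
		if err := m.Start(); err != nil {
			return fmt.Errorf("starting %s: %w", m.Name(), err)
		}
		kl.started = append(kl.started, m.Name())
	}
	kl.syncLoop(stop)
	return nil
}

// syncLoop is a placeholder that just waits for the stop signal.
func (kl *Kubelet) syncLoop(stop <-chan struct{}) { <-stop }

// startKubelet hands control to Kubelet.Run.
func startKubelet(kl *Kubelet, stop <-chan struct{}) error { return kl.Run(stop) }

func main() {
	kl := createAndInitKubelet("statusManager", "probeManager", "volumeManager", "pleg")
	stop := make(chan struct{})
	close(stop) // closed up front so the stubbed syncLoop returns immediately
	if err := startKubelet(kl, stop); err != nil {
		fmt.Println(err)
		return
	}
	fmt.Println(kl.started) // [statusManager probeManager volumeManager pleg]
}
```

Keeping construction and startup apart means nothing runs until every module is registered, and a failing Start aborts the run with that module's name in the error.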

The syncLoop process

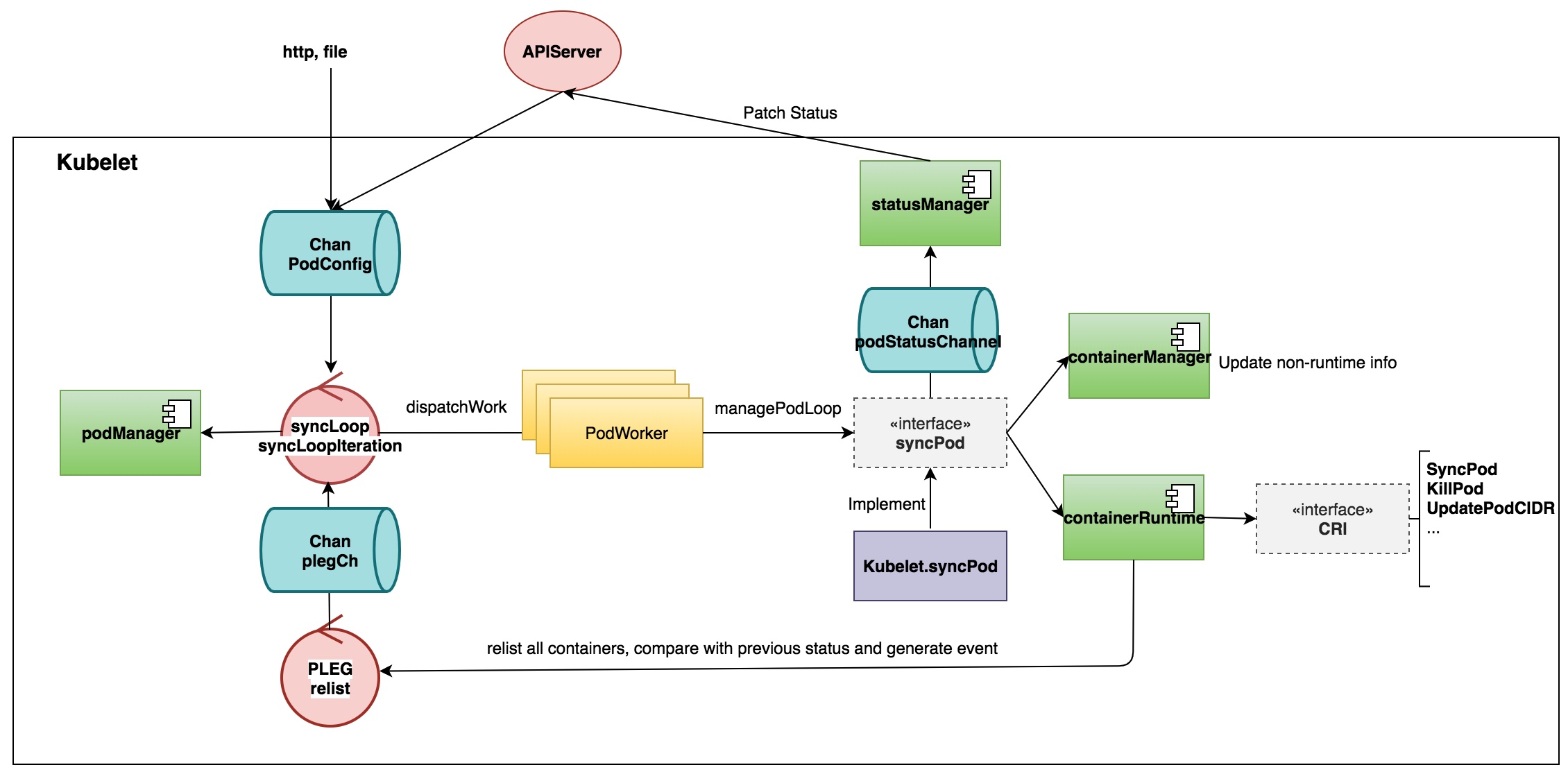

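Jumping into the last line of Kubelet.Run, which kubelet calls at startup, kl.syncLoop(updates, kl), takes us to kubelet's core syncLoop, i.e., the loop that processes pod update messages. I'll explain it directly with the diagram above.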

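- Pod changes that users make through HTTP, static files, and the APIServer are delivered to syncLoop through the PodConfig channel;
- syncLoop's syncLoopIteration takes the updates out of PodConfig. On one hand it updates the pod's state in podManager; on the other, dispatchWork hands the update to a PodWorker, which updates the pod by calling the syncPod interface (implemented by Kubelet.syncPod);
- syncPod sends status updates through the podStatusChannel channel to statusManager, which then patches the status to the APIServer. syncPod also updates non-runtime information, such as QoS and cgroup settings, through containerManager, and updates the pod's state through the CRI (the detailed pod operations can be worked out by studying dockershim or another shim);
- In addition, PLEG periodically (every 1s by default) relists the current state of all pods from the CRI, compares it with the previous state, and sends the resulting pod events to syncLoop.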

Summary

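This post studied and summarized kubelet's startup process and briefly introduced how syncLoop processes pod updates, which gives a rough picture of how the kubelet code is organized. Next steps could be to look into CRI, CSI, Device Plugin, and related topics.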